AI Agents in Production (Part 3): Multi-Agent Systems

MLOps.WTF Edition #25

Ahoy there 🚢,

Last time in our agents in production series we looked at workflows, and why agent systems need structure, tracing, and evaluation once they’re running in production. That was about keeping execution understandable.

This week the focus shifts to design. Even with good workflow discipline, there’s a point where a single agent is carrying too many responsibilities in one loop: interpreting the request, choosing tools, retrieving information, and composing the response. At that stage, adding more structure is not always enough.

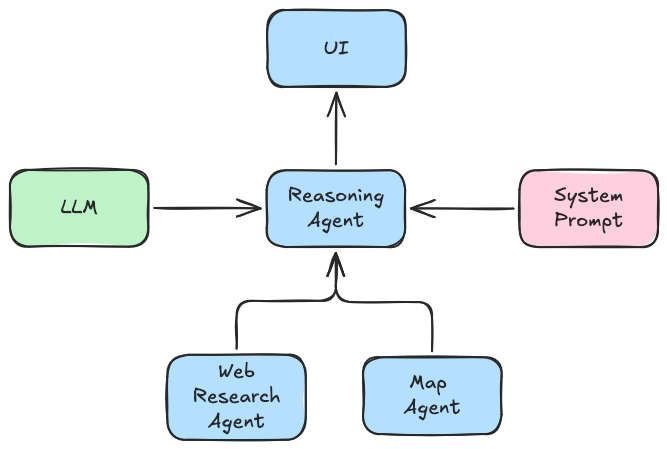

Misha explores a practical alternative: splitting capabilities across specialised agents, letting them delegate to each other, and introducing clearer boundaries between responsibilities. He also looks at what changes once those agents need to collaborate across systems, including protocols like Agent2Agent (A2A)…

“Just give the LLM more tools.”

What starts as a simple, helpful chatbot quickly turns into a single agent with an ever-growing tool belt. In an effort to give the agent greater capabilities, we keep handing it more tools, more external context, and more rules embedded in its system prompt. Eventually, the model loses track of the many tools available to it, forgets what the user’s original query was asking for, and, well, generally falls apart.

Fortunately, there’s a solution in sight. Just as software engineers arrived at microservice architecture patterns, AI engineers are rediscovering a very similar pattern in the age of agents: multi-agent systems. Instead of a single agent with a large set of toolsets, we build a set of specialised agents, each with its own task-specific tools.

In this post we’ll look at how and when to split agents up, what the A2A protocol gives us, and general considerations for multi-agent systems.

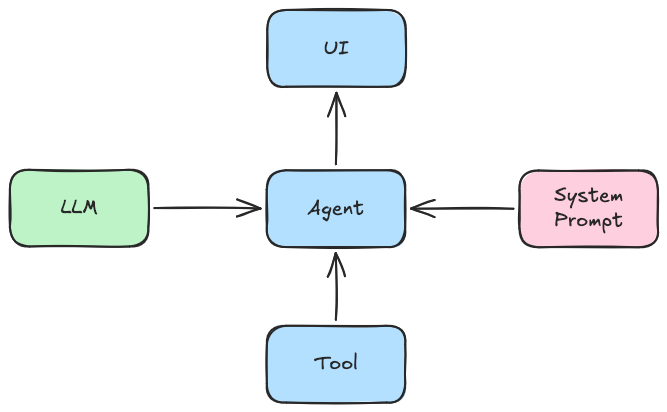

The “traditional” AI agent

Even though AI agents are still a rather new concept, many people already have an image of what an AI agent looks like. It’s a script with a loop that waits for user input and passes it to the LLM. The LLM then tells the script to use one or more tools (which are, in essence, just function calls), the script runs them and hands the results back, and the LLM uses those results to respond to the user.

The simplest case of an agent: a single system prompt and a single tool.
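To make that loop concrete, here’s a minimal, framework-free sketch in Python. The model call is replaced by a hypothetical `fake_llm` stub that requests the tool once and then answers, and `search_web` is a hypothetical tool; in a real agent both would be backed by an LLM provider and an actual search API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


# Hypothetical tool: a real one would call a web search API.
def search_web(query: str) -> str:
    return f"results for {query!r}"


TOOLS: Dict[str, Callable[[str], str]] = {"search_web": search_web}


@dataclass
class LLMReply:
    """A (stubbed) model reply: either a tool request or a final answer."""
    tool_name: Optional[str] = None
    tool_arg: Optional[str] = None
    text: Optional[str] = None


def fake_llm(messages: List[dict]) -> LLMReply:
    """Hypothetical stand-in for a real model call, not any provider's API.

    Requests the search tool after a user message, and answers after a tool result."""
    last = messages[-1]
    if last["role"] == "user":
        return LLMReply(tool_name="search_web", tool_arg=last["content"])
    return LLMReply(text=f"Here is what I found: {last['content']}")


def run_agent(user_input: str, system_prompt: str = "You are a helpful assistant.",
              max_steps: int = 5) -> str:
    messages = [{"role": "system", "content": system_prompt},
                {"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = fake_llm(messages)
        if reply.tool_name is None:
            # No tool requested: the model's text is the final answer.
            return reply.text or ""
        # Tool requested: run the plain function and feed its result back to the model.
        result = TOOLS[reply.tool_name](reply.tool_arg or "")
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")
```

In an interactive app, an outer loop would read each message with `input()` and pass it to `run_agent`. Every extra capability means another entry in `TOOLS` and more rules in the system prompt, which is exactly how the context grows.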

Consequently, the more things you want your agent to be able to do, the more tools and information you need to give it, which leads to what’s known as context rot (Hong et al., 2025): as the context grows, the model becomes less reliable at picking out and using the right information at the right moment. In plain terms, you keep adding “help”, and the system gets easier to confuse.

On top of that, many engineers would agree that the KISS (Keep It Simple, Stupid) principle applies here. We want our system to avoid unnecessary complexity so that it remains understandable and maintainable (amongst other things).

One could simply split the large agent into a bunch of smaller, separate agents and call it a day. But what if you need them to fulfil a complex request that genuinely requires tools from different domains? In that case, we have to make the individual agents able to talk to each other.

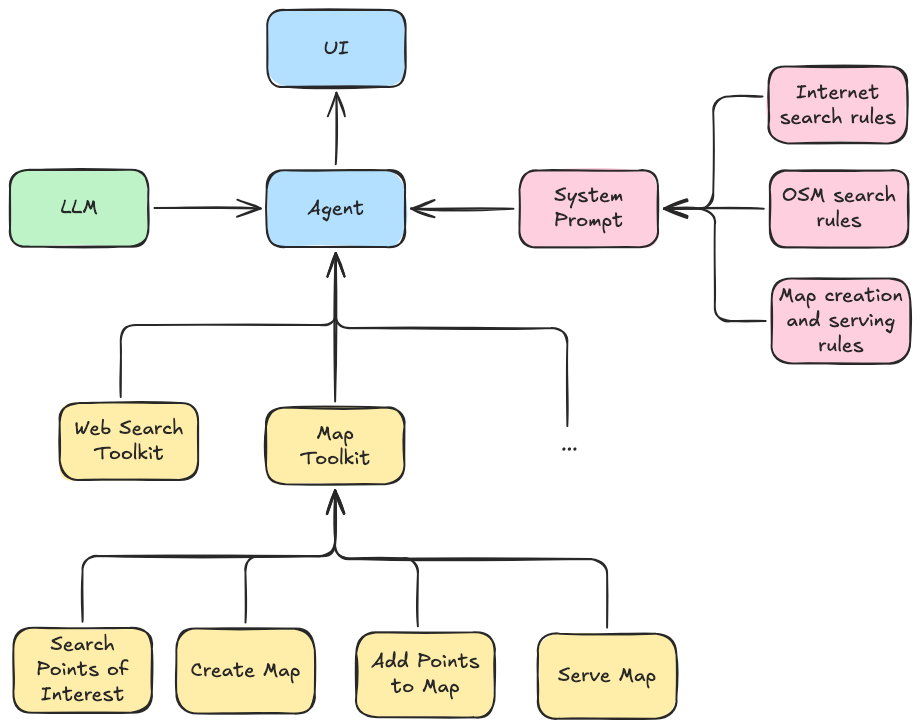

A practical example would be a personal assistant that I want to use to find the best specialty coffee shops in a given location. The diagram below outlines what the agent would look like if we built it as a single piece.

[Diagram of a single agent. The agent is given multiple toolkits, the Web Search Toolkit and the Map Toolkit, and its system prompt consists of multiple rules to cover different use cases.]

For the rest of the article, I’ll discuss multi-agent systems using terminology and concepts from Pydantic AI, since it’s the agentic framework I have the most experience with. However, everything I cover applies equally to the other major frameworks.

Agentic Workflows

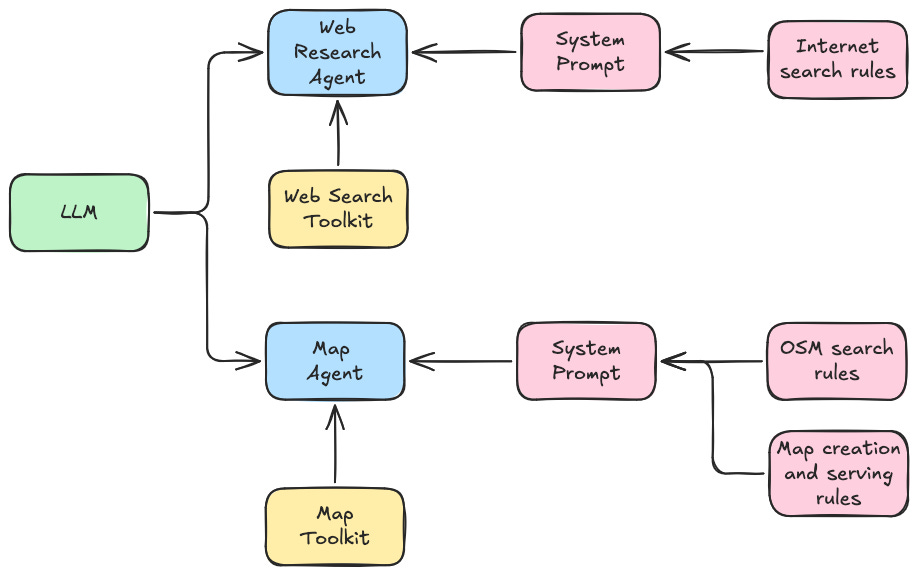

To solve this context rot problem when integrating many tools into an agentic system, we can set up a workflow that uses multiple agents with different capabilities. First and foremost, we split the single-purpose agents (namely the Map and Web Search agents) out of our large super-agent. Each of them has its own system prompt and its own set of tools.

In my diagram below, both agents use the same model under the hood, but that isn’t required. If we know that a particular model performs better on a specific task, we can easily swap it in. The same applies when we realise that a smaller model is more cost-effective and its performance isn’t significantly worse.

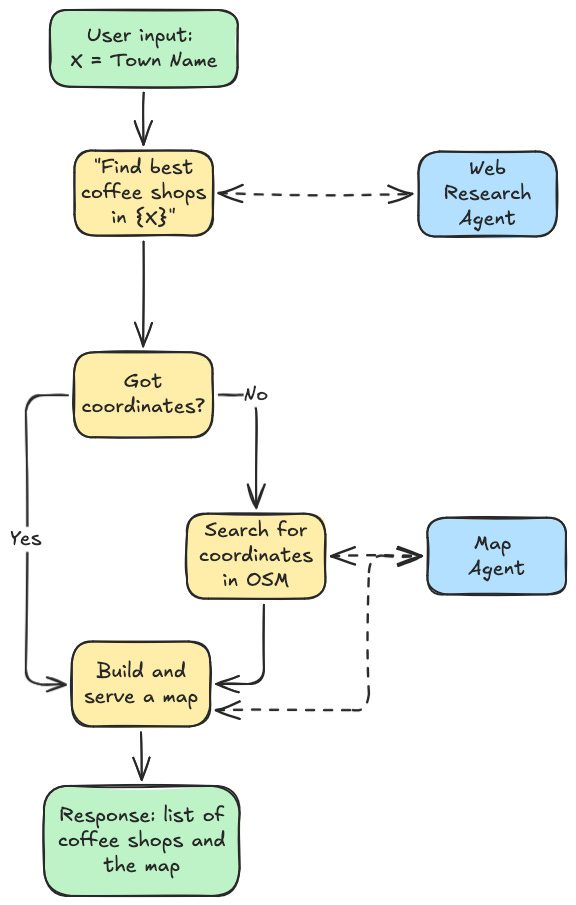
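One way to picture this split is as plain configuration: each specialised agent is a name, a model, a system prompt, and its own toolset. The sketch below uses a hypothetical `AgentSpec` dataclass rather than any framework’s API (in Pydantic AI these roughly correspond to the model and `system_prompt` arguments you pass to `Agent`), and the model and tool names are placeholders.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class AgentSpec:
    """Hypothetical, framework-agnostic description of one specialised agent."""
    name: str
    model: str              # placeholder model identifier
    system_prompt: str
    tools: Tuple[str, ...] = ()


map_agent = AgentSpec(
    name="map",
    model="large-model",    # placeholder, not a real model name
    system_prompt="You plot places on a map. Only use the map tools.",
    tools=("geocode", "plot_points"),
)

web_search_agent = AgentSpec(
    name="web_search",
    model="large-model",
    system_prompt="You find and summarise specialty coffee shops using web search.",
    tools=("search_web", "fetch_page"),
)

# Swapping one agent's model (e.g. for a cheaper one) is a local change:
# `replace` returns a new spec and leaves the other agent untouched.
cheap_map_agent = replace(map_agent, model="small-model")
```

Because each agent only sees its own prompt and tools, neither carries the other’s context, which is the whole point of the split.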

In the simplest case, then, we can write a script that asks the user for input (e.g. which town they want to search in) and then calls the appropriate agents in a deterministic order.
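A minimal sketch of such a deterministic workflow, assuming two hypothetical functions, `web_search_agent` and `map_agent`, that stand in for the real agents (here they return canned data so the example runs offline):

```python
from typing import Dict, List


def web_search_agent(town: str) -> List[Dict[str, str]]:
    """Hypothetical stand-in for the Web Search agent; returns canned results."""
    return [
        {"name": f"{town} Roasters", "address": f"1 High Street, {town}"},
        {"name": f"Bean There ({town})", "address": f"22 Market Square, {town}"},
    ]


def map_agent(shops: List[Dict[str, str]]) -> str:
    """Hypothetical stand-in for the Map agent; renders one text 'pin' per shop."""
    return "\n".join(f"[pin] {shop['name']} @ {shop['address']}" for shop in shops)


def coffee_workflow(town: str) -> str:
    # The order is fixed in code: search first, then map.
    # No LLM decides which agent runs next.
    shops = web_search_agent(town)
    return map_agent(shops)
```

In the actual script, the town would come from something like `input("Which town? ")`. Because the control flow lives in ordinary code, it is easy to test and trace.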

More often than not, you can stop here. You have a functional application that can solve a complex problem with ease. However, you can go a step further and ask, “What if I need to answer more open-ended questions?”

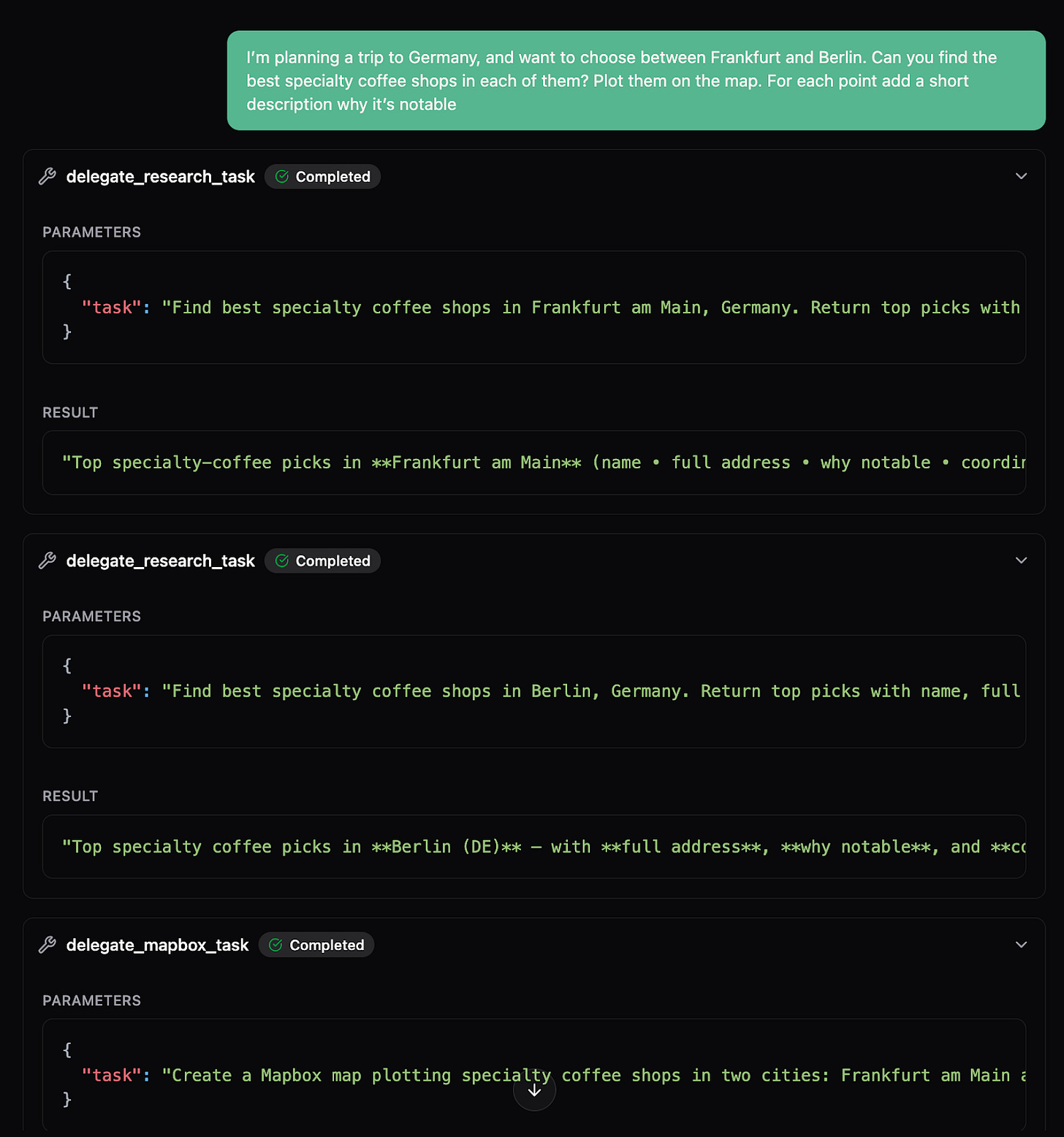

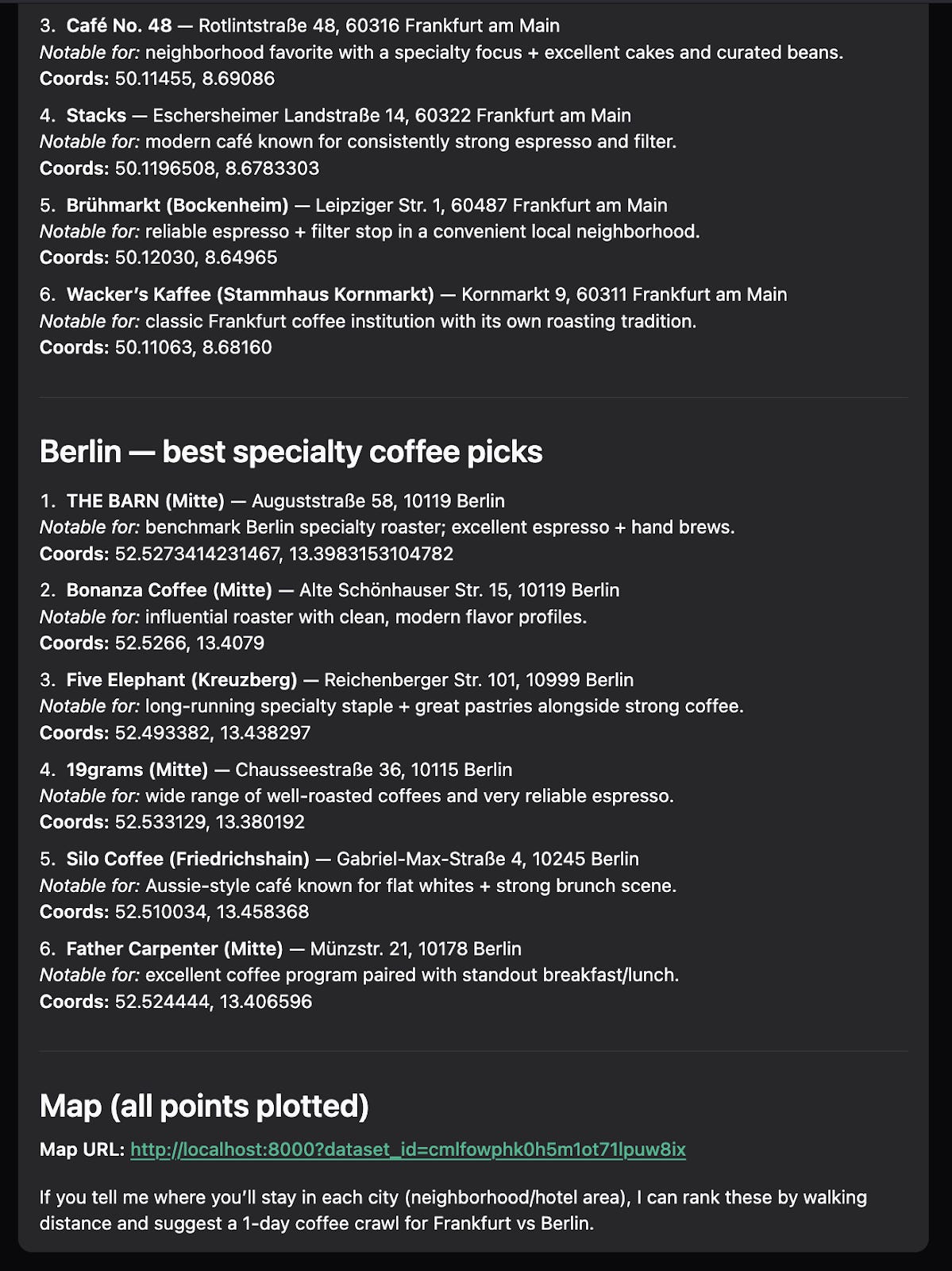

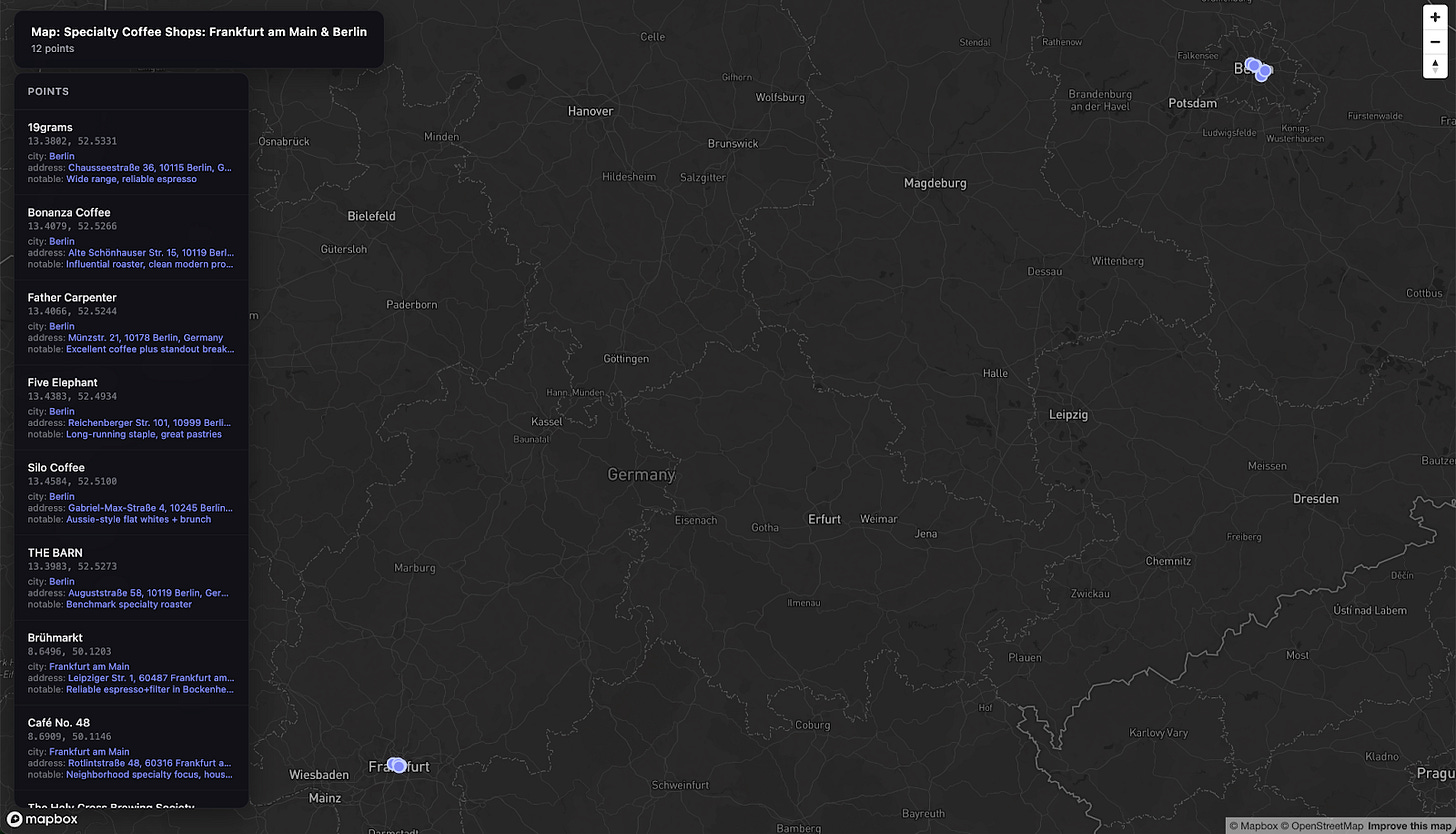

I hear you say, “I’m planning a trip to Germany, and want to choose between Frankfurt and Berlin. Can you find the best specialty coffee shops in each of them? Plot them on the map. For each point, add a short description of why it’s notable.” Unfortunately, we can’t really fit that into a neat workflow: how many searches to run, and which agents to call in what order, depends on the question itself.

Agent-to-agent delegation

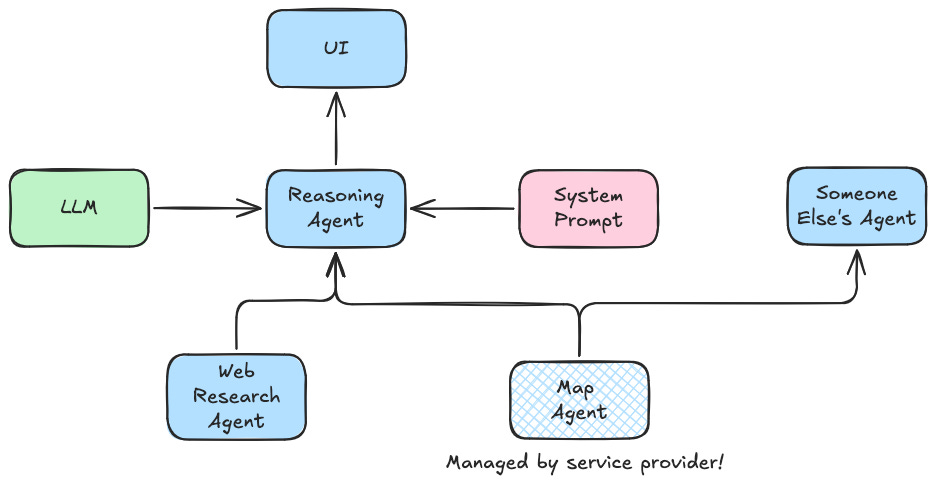

So what if we introduce a reasoning agent as the entry point? It figures out what to do with the query, splits it into sub-tasks, and delegates execution to specialised agents by calling them as tools.

If prompted well, the agent we talk to directly should be able to recognise when to delegate, and together the agents should solve the task quite effectively.
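Here is a rough sketch of delegation in plain Python. The sub-agents are registered as tools of the reasoning agent, and the reasoning agent’s decisions come from a hypothetical `fake_planner` that simply looks for known city names; a real LLM would decide how many calls to make and in what order. As far as I’m aware, the Pydantic AI equivalent is a tool on the parent agent whose body calls the sub-agent’s `run` method.

```python
from typing import Callable, Dict, List, Tuple


def web_search_agent(task: str) -> str:
    """Hypothetical specialised agent; a real one would run its own LLM loop."""
    return f"top coffee shops for: {task}"


def map_agent(task: str) -> str:
    """Hypothetical specialised agent that plots locations."""
    return f"map plotted for: {task}"


# From the reasoning agent's point of view, each sub-agent is just another tool.
DELEGATES: Dict[str, Callable[[str], str]] = {
    "web_search_agent": web_search_agent,
    "map_agent": map_agent,
}

KNOWN_CITIES = ("Frankfurt", "Berlin", "Manchester")


def fake_planner(query: str) -> List[Tuple[str, str]]:
    """Hypothetical stand-in for the reasoning LLM: returns (tool, sub-task) pairs."""
    cities = [city for city in KNOWN_CITIES if city in query]
    calls = [("web_search_agent", f"specialty coffee in {city}") for city in cities]
    calls.append(("map_agent", "all shops found above"))
    return calls


def reasoning_agent(query: str) -> str:
    results = []
    for tool_name, sub_task in fake_planner(query):
        # Delegate the sub-task to the specialised agent registered under tool_name.
        results.append(DELEGATES[tool_name](sub_task))
    return "\n".join(results)
```

For the Frankfurt-or-Berlin question, this makes two search delegations and one map delegation; with a real model, the number of calls isn’t known until the query arrives.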

The brief test in the screenshots above shows that it can indeed answer a reasonably complex query, making multiple delegation calls before answering. Such a system has a few other benefits too.

You can think of multi-agent systems in the same way as microservices. This architecture lets us develop, test, and evaluate each agent independently; they perform different functions, after all. On top of that, agents don’t have to share an environment: the memory contents, including potentially sensitive information, can stay within the agent that needs them. And, as mentioned earlier, we don’t have to use the same LLM for every agent, which can be quite convenient.

A2A Protocol and Talking Machines

So far, our agents have been defined in a single codebase and run in the same process. This way of running multi-agent systems has its drawbacks: we can’t scale agents independently of each other, we can’t reuse agents across different systems, and we can’t use different languages and frameworks for different agents.

This is a good point to introduce the A2A protocol. Developed by Google, it’s a standardised agent communication protocol that aims to address these problems. An agent publishes an “agent card” that, in short, advertises what the agent can do. Other agents can then send it messages to delegate tasks. (If you want a quick analogy: it’s closer to an API contract than to a new model capability.)
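To give a feel for what an agent card contains, here’s a hypothetical card for our Map Agent, written as a Python dict that serialises to JSON. The field names follow my reading of recent versions of the A2A specification and may differ in the version you target; the URL, the skill, and the helper `agents_with_tag` are made up for illustration.

```python
import json
from typing import List

# Hypothetical agent card for the Map Agent (field names per the A2A spec as I
# understand it; check the current version before relying on them).
map_agent_card = {
    "name": "Map Agent",
    "description": "Geocodes places and plots them on a map.",
    "url": "https://maps.example.com/a2a",   # hypothetical endpoint
    "version": "1.0.0",
    "capabilities": {"streaming": False},
    "defaultInputModes": ["text/plain"],
    "defaultOutputModes": ["text/plain"],
    "skills": [
        {
            "id": "plot-locations",
            "name": "Plot locations",
            "description": "Plots a list of named places on a map.",
            "tags": ["maps", "geocoding"],
            "examples": ["Plot these coffee shops in Berlin on a map"],
        }
    ],
}


def agents_with_tag(cards: List[dict], tag: str) -> List[str]:
    """Hypothetical helper: names of agents advertising a skill with the given tag."""
    return [
        card["name"]
        for card in cards
        if any(tag in skill.get("tags", []) for skill in card.get("skills", []))
    ]


card_json = json.dumps(map_agent_card, indent=2)  # what the agent would publish
```

A client would fetch a card like this, decide from the advertised skills whether the agent fits the sub-task, and then send messages to the advertised URL.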

The agents don’t have to be written in the same framework, run on the same machine, or even be maintained by the same people. As long as there’s network connectivity between two agents that implement the A2A protocol, they can talk to each other.
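One of A2A’s transports (and the original one) is JSON-RPC 2.0 over HTTP(S), which is why network connectivity is all two agents need. The sketch below builds a request for the `message/send` method and posts it with the standard library. The payload shape reflects my reading of recent spec versions (older drafts used different method and field names), and `build_message_send` and `send_to_agent` are hypothetical helpers.

```python
import json
import urllib.request
import uuid


def build_message_send(text: str, request_id: int = 1) -> dict:
    """Build a JSON-RPC 2.0 `message/send` request carrying one text part."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "message/send",
        "params": {
            "message": {
                "role": "user",
                "parts": [{"kind": "text", "text": text}],
                "messageId": str(uuid.uuid4()),  # unique id for this message
            }
        },
    }


def send_to_agent(agent_url: str, payload: dict, timeout: float = 30.0) -> dict:
    """POST the request to the agent's endpoint and decode the JSON-RPC response."""
    request = urllib.request.Request(
        agent_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Calling `send_to_agent("https://maps.example.com/a2a", build_message_send("Plot these coffee shops in Berlin"))` would only work against a real A2A server at that (hypothetical) address; nothing on the client side depends on which framework or language the remote agent uses.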

Although we’re not there yet (you don’t see specialised A2A-enabled agents everywhere), we can imagine a world where our reasoning agent talks to other specialised agents, some of which are run by other organisations. Take our Map Agent, which essentially wraps a map API. In theory, the map service provider could build and run that agent themselves and make it available via A2A for other multi-agent systems to use.

Where we ended up

We started with a simple idea, splitting a monolithic agent into sub-agents to reduce context (and hence improve the quality of responses), and ended up with a few other benefits on top.

Of course, there are drawbacks. First and foremost, we’ve roughly doubled the number of moving parts in our system, and with it the number of places where things can go wrong.

Also, while this arrangement gives us greater flexibility, it comes with more hyperparameters: each agent has its own model, system prompt, and toolset to tune. That makes things harder to keep track of when experimenting, though not impossible: MLflow and Opik have very comprehensive tracing capabilities, letting you record all the queries, tool calls, and responses. If you set up your evals right, the sky is the limit.
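The idea behind that kind of tracing can be sketched with a small decorator that records each agent and tool call. This hypothetical `traced` decorator keeps records in an in-memory list purely for illustration; in practice you’d use the tracing integrations of a tool like MLflow (which provides an `mlflow.trace` decorator) or Opik instead.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# In-memory trace log for illustration; a real setup would export to MLflow or Opik.
TRACE: List[Dict[str, Any]] = []


def traced(kind: str) -> Callable:
    """Hypothetical decorator recording inputs, output and duration of each call.

    Records are appended when a call finishes, so nested calls appear before their parent."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            result = fn(*args, **kwargs)
            TRACE.append({
                "kind": kind,            # e.g. "agent" or "tool"
                "name": fn.__name__,
                "args": args,
                "kwargs": kwargs,
                "result": result,
                "seconds": time.perf_counter() - start,
            })
            return result
        return wrapper
    return decorator


@traced("agent")
def web_search_agent(task: str) -> str:
    return f"top coffee shops for: {task}"


@traced("tool")
def map_tool(task: str) -> str:
    return f"map plotted for: {task}"
```

With every agent and tool wrapped this way, a single run of the system leaves a record of which component was called, with what, and what it returned, which is the raw material your evals need.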

Nevertheless, multi-agent systems appear to be a very powerful pattern, akin to microservice architecture. I’m very keen to see more examples of publicly available agents that implement A2A, making agent collaboration more accessible. As with any engineering pattern, I’d be cautious about starting with a multi-agent system if a single, simple agent would suffice, as it would only overcomplicate things. However, it’s worth keeping in mind that this separation of capabilities is available when you need it, and I hope the article above illustrates when that might be the case.

Misha is a Senior MLOps Engineer here at Fuzzy Labs. His background spans computer science and bioinformatics. He studied at the University of Manchester and completed his Master’s at Imperial College London. Outside of work, he’s always on the hunt for the best cup of coffee, which may or may not have inspired all the examples in this MLOps.WTF edition.

And finally

What’s coming up

Our next MLOps.WTF meetup will be on the 25th of March, back on our home turf at DiSH in Manchester.

This meetup will focus on Agentic AI in financial services, with an emphasis on how these systems are built, monitored, and governed once they’re running in regulated environments.

Three exciting speakers are to be announced. Make sure you get your ticket!

🗓️ Wednesday 25th March — Manchester

About Fuzzy Labs

We’re Fuzzy Labs, a Manchester-based MLOps consultancy founded in 2019. We’re engineers at heart, and nerds who are passionate about the power of open source.

Want to join the team? You’re in luck!

Open roles:

Not subscribed yet? Why not? All this MLOps goodness straight to your inbox!

The next issue will continue our agents in production series, with Oscar taking on the topic of evaluating AI agents. Definitely one to watch out for.

Or, equally, why not follow us on LinkedIn for more behind-the-scenes bits and pieces, alongside updates on future events and thought pieces 🍅.