It’s Machine Learning but is the Machine Learning?

MLOps.WTF Edition #6

Ahoy there 🚢,

Matt Squire here, CTO and co-founder of Fuzzy Labs, and this is the 6th edition of MLOps.WTF, a fortnightly newsletter where I discuss topics in Machine Learning, AI, and MLOps.

In 1822 at the Royal Astronomical Society, Charles Babbage unveiled a new invention: a machine that could tabulate mathematical functions. One significant application of Babbage’s machine was to the computation of astronomical tables — that is, tables showing where certain stars and planets will rise and set on each day of the year.

This was a big deal, not just for astronomy fans, but more significantly for sailors, who relied on these tables for navigation. Babbage’s Difference Engine caught the attention of the British government too, who started to fund his work. At the time, astronomical tables could only be produced by hand; a machine would not only save money but also be of vital strategic importance to an empire built on maritime supremacy.

You know how people say “there are no stupid questions”? Well, Babbage didn’t agree. In his autobiography, he wrote:

On two occasions I have been asked, — "Pray, Mr. Babbage, if you put into the machine wrong figures, will the right answers come out?" In one case a member of the Upper, and in the other a member of the Lower, House put this question. I am not able rightly to apprehend the kind of confusion of ideas that could provoke such a question.

Of course, we all love making fun of politicians. But looking beyond that, I wonder: is this question as silly as it seems? Nobody had seen anything like the Difference Engine before, so nobody had a mental model of its inner workings; it might as well have been magic to them. Besides, we humans sometimes do get the right solution to a problem in spite of incorrect, or at least incomplete, inputs; even some politicians!

While it’s clear to us today — as it was to Babbage — that a calculator can’t give you the right answer with the wrong inputs, when it comes to AI systems, similar questions occur more often than you might think, and the response is somewhat more complicated.

One question we’re often asked goes something like this: “once the AI is deployed, will it keep learning?” This isn’t a bad question: in deployment, a model sees so much data that it’s not unreasonable to imagine it using that input stream to learn and improve. Isn’t that what AI does?

As ML practitioners, we understand that this simply isn’t how it works. Typically we train a model once, and deploy it. Once deployed it’s static; it doesn’t learn new things in production. Sure, we can train a new version and deploy that, but once deployed, that model doesn’t change.

But this mode of learning doesn’t sit right with our intuitions. Yann LeCun estimates that a child will receive 1E15 bytes of input through their eyes alone by the age of four, and already that’s 50 times more data than our largest LLMs are trained with. The child learns continuously, figuring out everything from intuitive physics to language from noisy input data.

Why don’t our AI systems do likewise? There are several approaches that deviate from the batch-style, offline learning paradigm.

Perhaps the most robust is continual learning, where models are retrained on new data, either on a fixed schedule or when a detected change in the data distribution triggers retraining. This gets us closer, but it’s still essentially offline learning with cron jobs.
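To make the drift-triggered variant a little more concrete, here is a minimal sketch of one possible trigger. It assumes a single numeric feature and uses a crude mean-shift check; the function name `drift_detected`, the threshold of 3 standard errors, and the sample values are all made up for illustration, and real systems tend to use more careful statistical tests.

```python
import math
import statistics


def drift_detected(reference, recent, threshold=3.0):
    """Return True when the mean of `recent` sits more than `threshold`
    standard errors away from the mean of `reference`.

    `reference` is a sample of the data the model was trained on;
    `recent` is a window of values seen in production.
    """
    ref_mean = statistics.fmean(reference)
    ref_std = statistics.stdev(reference)
    standard_error = ref_std / math.sqrt(len(recent))
    if standard_error == 0:
        # Degenerate case: the reference data has no spread at all.
        return statistics.fmean(recent) != ref_mean
    z = abs(statistics.fmean(recent) - ref_mean) / standard_error
    return z > threshold


# Illustrative values only (assumed, not real data).
reference = [10.0, 10.5, 9.5, 10.2, 9.8, 10.1, 9.9, 10.0]
stable = [10.1, 9.9, 10.0, 10.2]
shifted = [12.0, 12.3, 11.8, 12.1]

for name, window in [("stable", stable), ("shifted", shifted)]:
    action = "retrain" if drift_detected(reference, window) else "keep current model"
    print(f"{name}: {action}")
```

In practice the "retrain" branch would kick off the same offline training pipeline as the scheduled job, which is why this still counts as offline learning with a smarter trigger.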

There are also more exotic techniques, such as online learning, where models update in real time as new data arrives, and reinforcement learning, where models interact with the world and gain rewards for finding strategies that make them more successful at their goal.
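As a rough illustration of what "updating as new data arrives" can mean, here is a minimal sketch of online learning using a linear model trained by stochastic gradient descent, one observation at a time. The class `OnlineLinearModel`, its `learn_one` method, the learning rate, and the simulated stream (y = 2x + 1) are all assumptions chosen for this example, not a reference to any particular library.

```python
class OnlineLinearModel:
    """A linear regressor that takes one gradient step per observation."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.bias + sum(w * xi for w, xi in zip(self.weights, x))

    def learn_one(self, x, y):
        """Update the parameters from a single (x, y) pair and return the
        prediction error measured before the update."""
        error = self.predict(x) - y
        self.weights = [
            w - self.learning_rate * error * xi
            for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error
        return error


model = OnlineLinearModel(n_features=1)

# Simulated stream: each observation arrives, is learned from, then discarded.
for step in range(5000):
    x = [(step % 10) / 10.0]
    y = 2.0 * x[0] + 1.0
    model.learn_one(x, y)

print(model.weights, model.bias)
```

Note that the sketch quietly assumes the true `y` shows up alongside every `x`, which is exactly the luxury most deployed models don't have.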

However, these techniques are not as ubiquitous as you might expect, and there are a few reasons for that.

To begin with, there’s the problem of ground truth. Your deployed image classifier might be seeing thousands of cat pictures per hour, but if you’re never telling it which pictures are cats and which aren’t, how could it learn from these? Typically applications of online and continual learning are limited to domains like time-series prediction, where inputs serve both as training variables and target variables. Cases where metrics can be collected later about model performance, like recommendation engines, work well too.

It’s also just a lot harder to engineer. With online learning, you have to strike a tricky balance between updating the model to account for new information and remembering patterns from further in the past in case they should arise again. And you have to do this in real time. It’s much simpler to rely on conventional methods, training and evaluating your models offline before putting them into action.
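The balance between adapting and remembering can be seen even in the simplest possible "model": an exponentially weighted moving average. The sketch below is only an analogy, with made-up numbers; the function name `ewma` and the two `alpha` values are assumptions for illustration. A high `alpha` chases new data quickly but forgets the past, while a low `alpha` remembers the past but is slow to notice change.

```python
def ewma(stream, alpha):
    """Exponentially weighted moving average of a stream.

    `alpha` is the weight given to each new observation: close to 1 means
    adapt fast and forget fast; close to 0 means adapt slowly and remember.
    """
    estimate = stream[0]
    for value in stream[1:]:
        estimate = alpha * value + (1 - alpha) * estimate
    return estimate


# Illustrative stream: a long stable period at 0, then a shift to 10.
stream = [0.0] * 50 + [10.0] * 10

fast = ewma(stream, alpha=0.5)   # tracks the new level almost immediately
slow = ewma(stream, alpha=0.05)  # still heavily influenced by the old level

print(f"fast learner: {fast:.2f}, slow learner: {slow:.2f}")
```

Neither setting is right in general: if the shift to 10 turns out to be a temporary blip, the slow learner looks wise; if it's the new normal, the fast learner does.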

Despite all of this, we can’t help thinking that there’s something seductively elegant about a system that, when deployed and left unattended, just learns, updating its parameters over time.

Right now there isn’t a great deal of MLOps tooling to support online learning, but that may change in the future. Among other things, model monitoring will be incredibly important, because not only can the data distribution change over time, but so can the model itself. Presumably model versioning will look quite different in this world too, and perhaps we’ll just think of the model plus the most recent N observations (weighted by recency) as a long-running time series.

If you’re deploying online learning systems today, we’d love to hear your thoughts. Where do the tools need to be improved, in your experience?

And if you’re wondering what happened to Charles Babbage, the next machine he proposed, the Analytical Engine, would have been remarkably close to modern-day computers, though sadly it never got built. But he did get to collaborate with Ada Lovelace, who casually invented programming somewhere around 1842.

Thanks to Danny Wood from the Fuzzy Labs team for co-authoring this edition!

And Finally

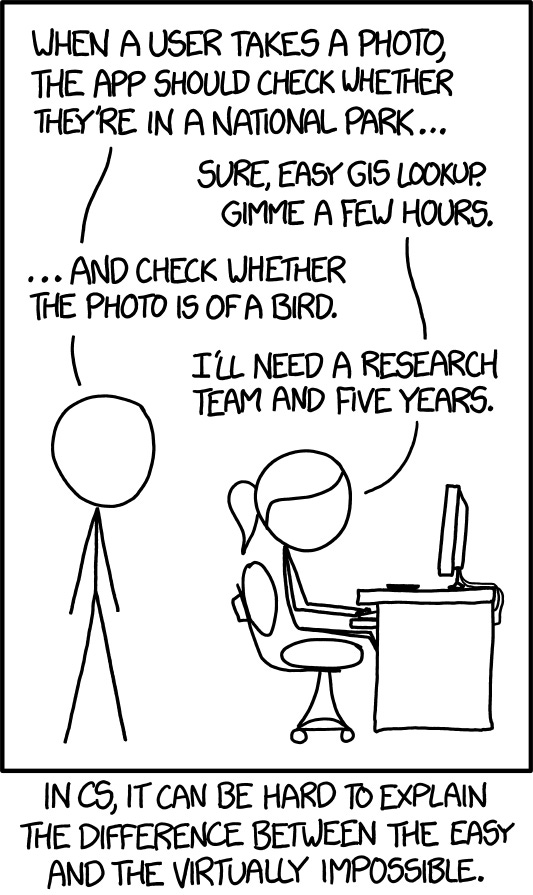

It’s amazing how quickly we go from being blown away by new technology to completely taking it for granted. Devoted XKCD followers will recognise this panel, which turned ten years old this week.

At that time, we were just about getting to grips with image classification as a thing. You could probably tell if an image was of a bird, but telling whether there was a bird anywhere in an image seemed like it was still out of reach. Now, it seems like a trivial endeavour.

But as Simon Willison points out in his blog, the larger point of the comic still stands: To non-technical people, the difference between things that are trivial and things that are nigh impossible often seems arbitrary or nonsensical, and this is exacerbated by LLMs, whose capabilities and limitations are even harder to wrap your head around.

https://simonwillison.net/2024/Sep/24/xkcd-1425-turns-ten-years-old-today/

Thanks for reading!

Matt

About Matt

Matt Squire is a human being, programmer, and tech nerd who likes AI, MLOps and barrel ponds. He’s the CTO and co-founder of Fuzzy Labs, an MLOps company based in the UK. He’s also getting married in a couple of weeks and then going on safari, so who knows when the next newsletter will be out. BTW - Fuzzy Labs are currently hiring, so if you like what you read here and want to get involved in this kind of thing then check out the available roles here.

Each edition of the MLOps.WTF newsletter is a deep dive into a certain topic relating to productionising machine learning. If you’d like to suggest a topic, drop us an email!